Object counting AI looks deceptively simple. Count the items in an image, return a number, move on. In controlled demos, it often works. In real operational environments, it usually doesn’t.

Once images come from warehouses, sites, facilities, or logistics workflows, the assumptions behind most object counting systems fall apart. Objects overlap, stack, partially disappear from view, vary in size, and sit inside cluttered scenes captured from imperfect angles. As counts increase, accuracy drops sharply, and trust in the result goes with it.

This gap between demo performance and real-world usability is why object counting has struggled to move from experimentation into daily operations.

A key reason for this gap is that object counting is often treated as a by-product of object detection.

Object detection answers what is in an image and where it appears. Object counting answers a harder question: how many are actually present, reliably and consistently. This distinction is explored further in our Object Counting AI overview. Detection accuracy can remain acceptable while counting accuracy collapses, especially in dense or overlapping scenes.
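To make that concrete, here is a minimal sketch of one way a detector's count can drift in dense scenes. It assumes a detector that post-processes its boxes with non-maximum suppression (NMS), a common step that drops boxes overlapping a higher-scoring box too heavily. The boxes, scores, and the 0.5 threshold are made up for illustration and do not describe any particular product.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def count_after_nms(boxes: List[Box], scores: List[float],
                    iou_threshold: float = 0.5) -> int:
    """Greedy NMS: keep the highest-scoring boxes, drop heavy overlaps."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    kept: List[int] = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in kept):
            kept.append(i)
    return len(kept)


# Illustrative data: every box below is a real object, correctly located.
sparse_boxes = [(0, 0, 10, 10), (20, 0, 30, 10), (40, 0, 50, 10)]
sparse_scores = [0.9, 0.8, 0.85]

# Four real objects packed closely, each shifted 3 units from its neighbour.
dense_boxes = [(0, 0, 10, 10), (3, 0, 13, 10), (6, 0, 16, 10), (9, 0, 19, 10)]
dense_scores = [0.9, 0.8, 0.85, 0.7]

print(count_after_nms(sparse_boxes, sparse_scores))  # 3 of 3 objects counted
print(count_after_nms(dense_boxes, dense_scores))    # 2 of 4 objects counted
```

In the dense case every box that survives is a correct detection, so a precision-style detection metric still looks fine, yet half the objects are missing from the count.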

In practice, teams don’t just need to know that objects exist. They need confidence that the count reflects reality, because counts drive decisions such as stock checks, site readiness, compliance, and verification.

Most object counting systems struggle for the same structural reasons:

When teams can’t see why a number was produced, they revert to manual checks. At that point, automation adds friction instead of removing it.

For object counting to work in production, it needs to meet a different set of requirements than most legacy approaches were designed for.

Reliable counting systems must:

These principles are built into our Object Counting AI and Object Counter Vision Agent.

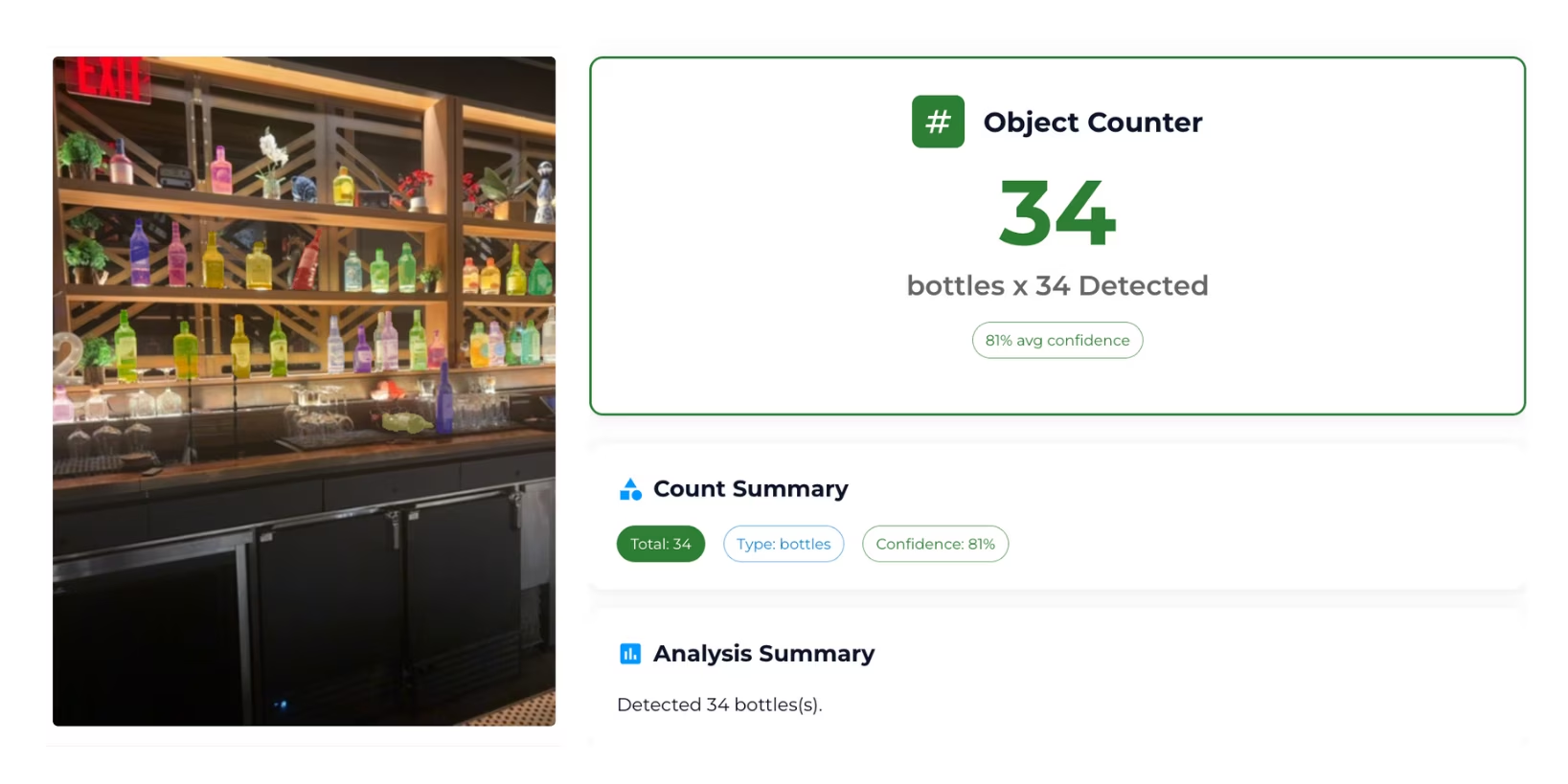

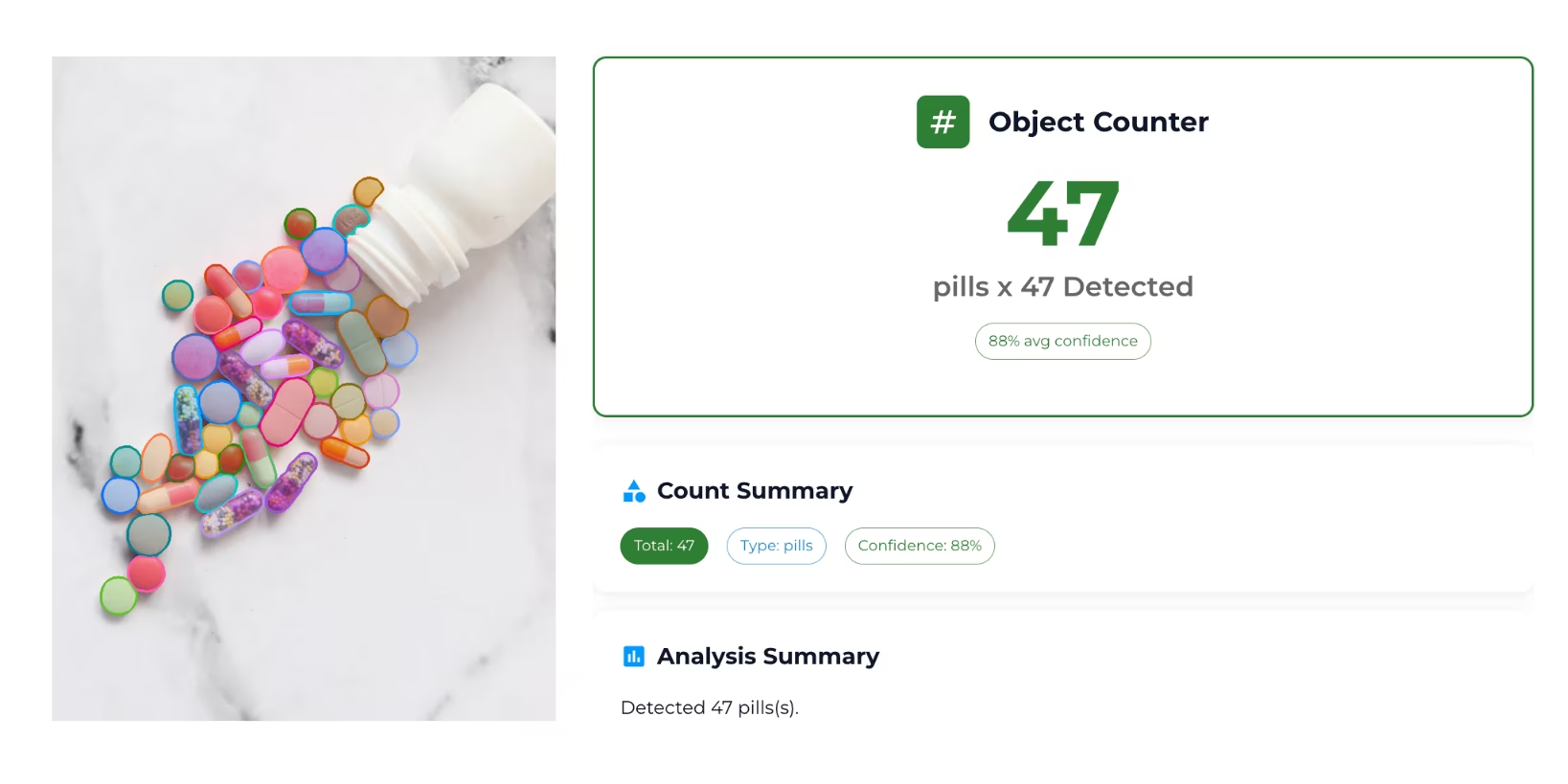

The last point is critical. Visual results turn object counting from a black box into something teams can trust, audit, and act on.

Recent advances in Vision AI have shifted how object counting is implemented. Instead of producing a single number, modern systems increasingly show what was counted directly in the image.

This allows users to:

This shift is what makes object counting usable outside of demos.
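As a rough sketch of what a reviewable result could look like in code, the count below is derived from a list of located items rather than stored as a bare number, so each unit in the total can be drawn on the image, checked, and rejected. The class names, fields, and the 0.6 review threshold are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class CountedItem:
    """One counted object, with the evidence needed to draw it on the image."""
    item_id: int
    box: Tuple[int, int, int, int]  # (x1, y1, x2, y2) in pixels
    confidence: float


@dataclass
class CountResult:
    """Hypothetical result type: the count is always backed by visible items."""
    items: List[CountedItem] = field(default_factory=list)

    @property
    def count(self) -> int:
        return len(self.items)

    def needs_review(self, min_confidence: float = 0.6) -> List[CountedItem]:
        """Items a reviewer may want to look at first."""
        return [it for it in self.items if it.confidence < min_confidence]

    def reject(self, item_id: int) -> None:
        """Remove an item a reviewer marked as wrong; the count follows."""
        self.items = [it for it in self.items if it.item_id != item_id]


result = CountResult([
    CountedItem(1, (12, 40, 88, 120), 0.97),
    CountedItem(2, (95, 42, 170, 118), 0.91),
    CountedItem(3, (160, 45, 230, 119), 0.41),  # low confidence, flag it
])

print(result.count)                                  # 3
print([it.item_id for it in result.needs_review()])  # [3]
result.reject(3)
print(result.count)                                  # 2
```

Because the number is computed from the items, a correction made during review changes the count automatically, and the remaining boxes still explain how the total was reached.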

When accuracy and reviewability are designed in from the start, object counting becomes a practical tool across many environments:

In these scenarios, the count itself matters, but the ability to trust the count matters more.

Object counting works best when it isn’t isolated. Combined with other visual capabilities, it becomes part of a larger decision-making flow.

Modern Object Counting AI systems increasingly combine counting with other Vision Agents such as object validation, size estimation, and structured data extraction.

This reflects a broader shift in Vision AI: moving away from single-purpose models toward Vision Agents that support real operational decisions.

Object counting is finally crossing the line from “interesting demo” to “reliable operational tool”, not because the problem got simpler, but because the approach got smarter.
